Medical imaging AI/machine learning bias awareness tool:

An interactive decision tree.

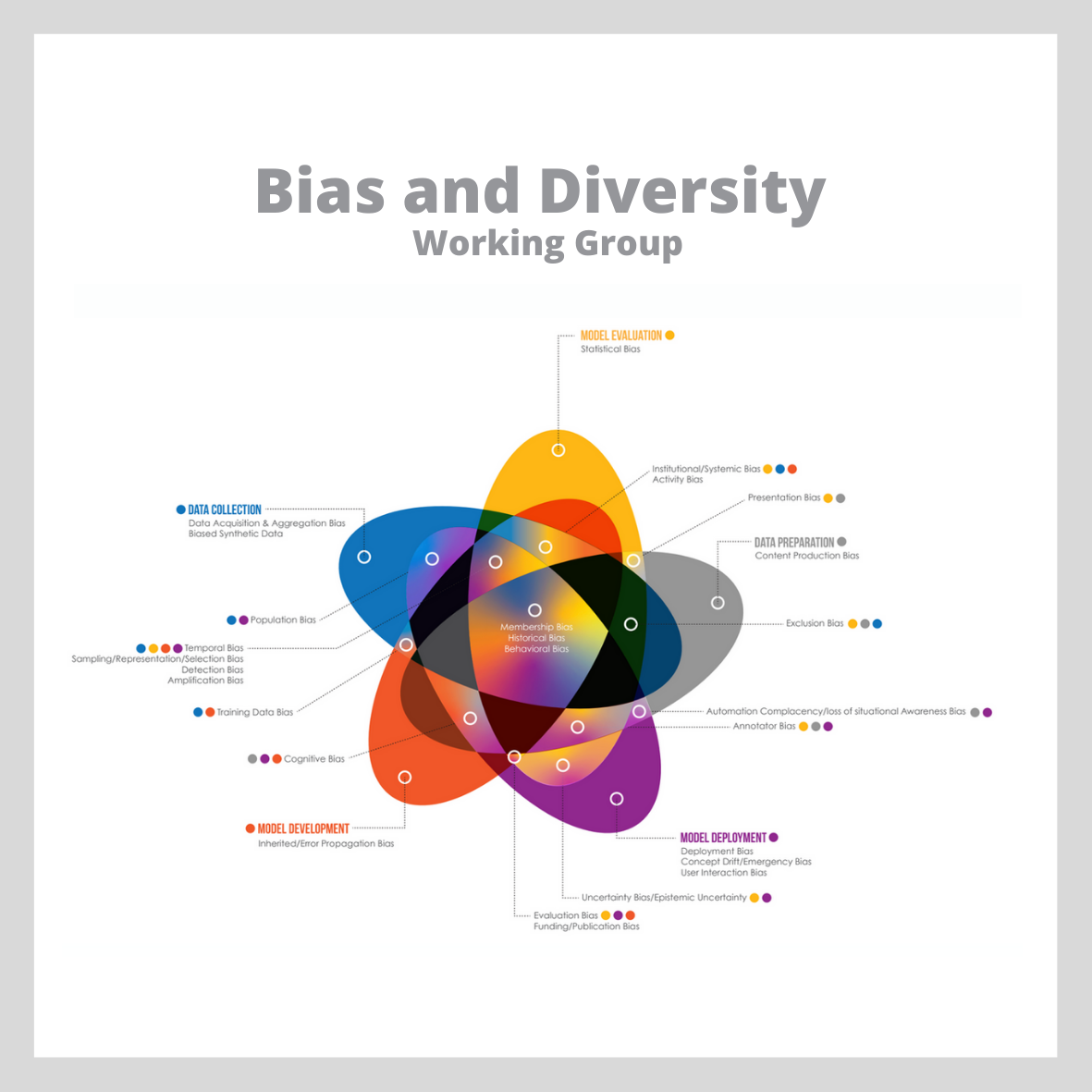

Brought to you by the MIDRC bias and diversity working group.

Last updated February 15, 2024

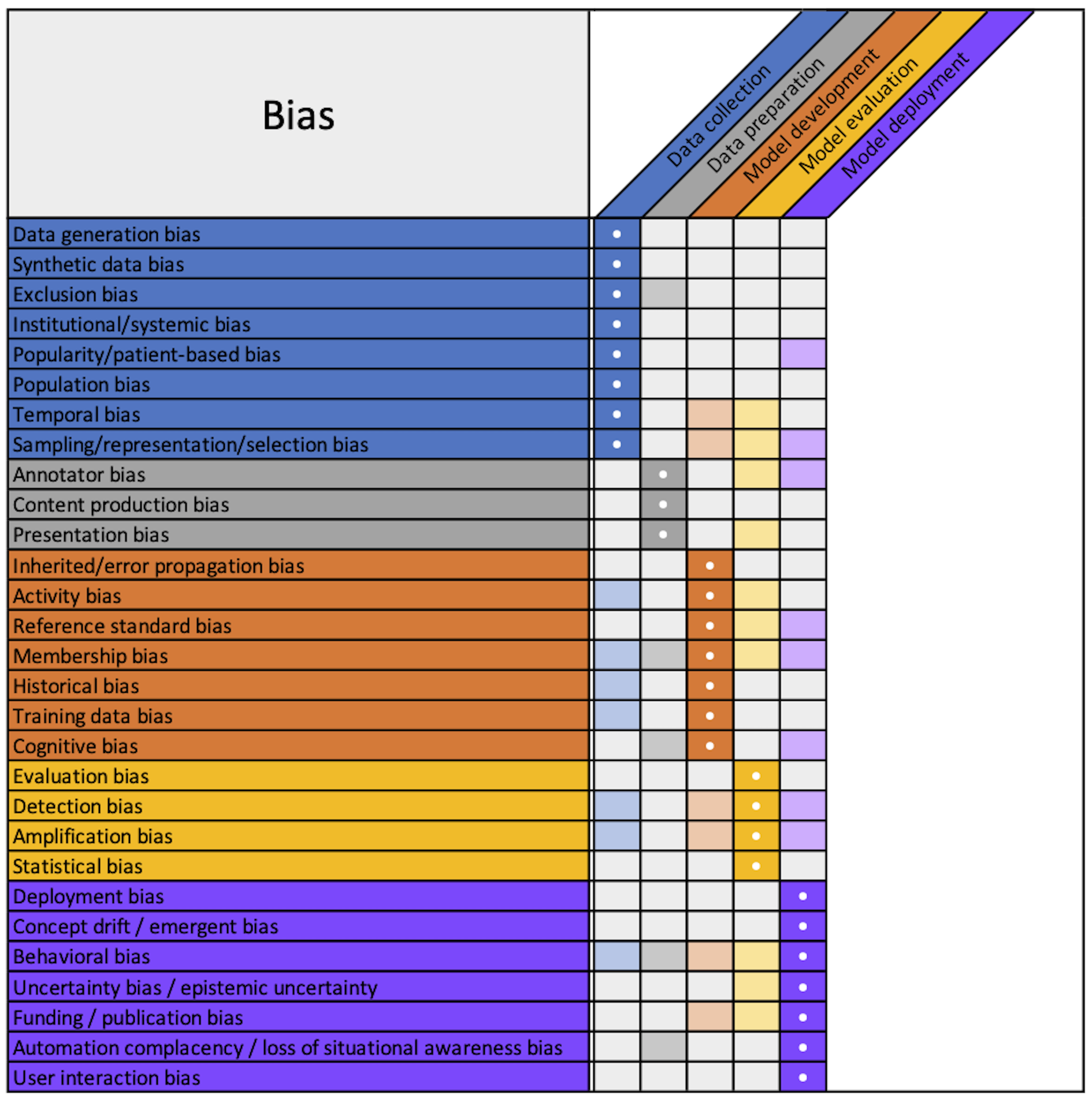

A diverse data collection and curation strategy, together with the discovery and mitigation of bias in medical image analysis within a data commons such as MIDRC, is critically important for developing ethical AI algorithms that produce trustworthy results for all groups. Biases can arise at many steps along the AI/machine learning (ML) pipeline, from data collection to model deployment in clinical practice. Our bias awareness tool was created to help identify potential sources of bias. It provides descriptions, impacts, real-world examples, measurement methods, mitigation considerations, and literature references for the following steps along the medical image analysis AI/ML pipeline:

Data Collection

Data Preparation and Annotation

Model Development

Model Evaluation

Model Deployment

Although its list may not be fully comprehensive, the interactive tool below includes about 30 potential sources of bias across the 5 main steps of the AI/ML pipeline, including many biases that can affect more than one step.

Please cite: Drukker, K., Chen, W., Gichoya, J., Gruszauskas, N., Kalpathy-Cramer, J., Koyejo, S., Myers, K., Sá, R.C., Sahiner, B., Whitney, H., Zhang, Z., and Giger, M.L., 2023. Toward fairness in artificial intelligence for medical image analysis: identification and mitigation of potential biases in the roadmap from data collection to model deployment. Journal of Medical Imaging, 10(6), p. 061104. https://doi.org/10.1117/1.JMI.10.6.061104.

Please contact us at MIDRCbiasfeedback@aapm.org if you need assistance or have further information on biases that could be included in this resource. We encourage your feedback and input!